PyTorch is an open-source machine learning library for the Python programming language, based on the Torch library. It is a deep learning framework introduced by Facebook and is used for applications such as natural language processing.

PyTorch provides the following high-level features:

- Tensor computing with strong acceleration via graphics processing units (GPUs).

- Deep neural networks built on a tape-based automatic differentiation (autograd) system.
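Both features can be seen in a few lines. The sketch below is only an illustration (the tensor shapes and the function `x ** 3 + 2 * x` are arbitrary choices). It runs a matrix multiplication on a GPU when one is available, falling back to the CPU otherwise, and then lets autograd record operations on a tensor and replay them backwards to compute a gradient.

```python
import torch

# Pick a GPU when one is available; otherwise fall back to the CPU
device = "cuda" if torch.cuda.is_available() else "cpu"

# Tensor computing: a matrix multiplication that runs on the chosen device
a = torch.randn(3, 4, device=device)
b = torch.randn(4, 2, device=device)
c = a @ b
print(c.shape)  # torch.Size([3, 2])

# Tape-based autograd: operations on x are recorded, then replayed in reverse
x = torch.tensor(2.0, requires_grad=True)
y = x ** 3 + 2 * x  # dy/dx = 3x^2 + 2, which is 14 at x = 2
y.backward()
print(x.grad)  # tensor(14.)
```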

PyTorch was developed to provide high flexibility and speed when implementing and building deep neural networks. Because it is a machine learning library for Python, it is simple to install, run, and understand. PyTorch is completely Pythonic (it uses widely adopted Python idioms rather than requiring Java or C++ code), so you can build a neural network model quickly.
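To illustrate the Pythonic style, here is a minimal sketch of a small network defined as an ordinary Python class. The class name `TinyNet` and its layer sizes are hypothetical choices for illustration; the building blocks (`nn.Module`, `nn.Linear`, `torch.relu`) are standard PyTorch.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical two-layer network; the sizes are arbitrary."""

    def __init__(self, in_features: int = 4, hidden: int = 8, out_features: int = 2):
        super().__init__()
        self.fc1 = nn.Linear(in_features, hidden)
        self.fc2 = nn.Linear(hidden, out_features)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # The forward pass is plain Python code calling PyTorch layers
        return self.fc2(torch.relu(self.fc1(x)))


model = TinyNet()
out = model(torch.randn(5, 4))  # a batch of 5 samples with 4 features each
print(out.shape)  # torch.Size([5, 2])
```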

History of PyTorch

PyTorch was released by Facebook in 2016, and researchers have adopted it increasingly ever since. Facebook also maintained Caffe2 (Convolutional Architecture for Fast Feature Embedding), but converting a PyTorch-defined model into Caffe2 was difficult. To address this, Facebook and Microsoft introduced the Open Neural Network Exchange (ONNX) in September 2017. In simple terms, ONNX was developed for converting models between frameworks. In March 2018, Caffe2 was merged into PyTorch.

PyTorch makes it easy to build extremely complex neural networks, and this quickly made it a go-to library. In research work, it competes closely with TensorFlow. Its creators set out to build a highly imperative library that could easily run all numerical computations, and the result was PyTorch. Deep learning scientists, machine learning developers, and neural network debuggers faced a big challenge in running and testing parts of their code in real time. PyTorch solves this by letting them run and test their code as they write it, so they do not have to wait to find out whether it works.
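A minimal sketch of what "testing code in real time" looks like under eager execution: each statement runs as soon as it is reached, so intermediate results can be printed or checked with ordinary `assert` statements (or inspected in a standard Python debugger such as `pdb`). The shapes here are arbitrary illustration values.

```python
import torch

torch.manual_seed(0)

x = torch.randn(4, 3)
w = torch.randn(3, 2)

# Each statement executes immediately, so intermediate values can be checked on the spot
h = x @ w
print(h.shape)  # torch.Size([4, 2])
assert h.shape == (4, 2), "unexpected shape after the matrix multiply"

z = torch.relu(h)
print(z)  # concrete numbers are available right away; there is no separate "run" step
assert (z >= 0).all(), "ReLU output should be non-negative"
```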

Note: You can reuse familiar Python packages such as NumPy, SciPy, and Cython to extend PyTorch when needed.
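One way to extend PyTorch with NumPy is a custom `torch.autograd.Function`. In the sketch below, the operation `NumpySquare` is a hypothetical example: the forward pass is computed with NumPy, and the backward pass supplies the gradient by hand so that autograd can still differentiate through it.

```python
import numpy as np
import torch


class NumpySquare(torch.autograd.Function):
    """Hypothetical op: squares its input using NumPy instead of torch."""

    @staticmethod
    def forward(ctx, x):
        ctx.save_for_backward(x)
        # Compute with NumPy on a detached CPU copy, then wrap the result as a tensor
        result = np.square(x.detach().cpu().numpy())
        return torch.from_numpy(result).to(x.device)

    @staticmethod
    def backward(ctx, grad_output):
        (x,) = ctx.saved_tensors
        # d(x^2)/dx = 2x, chained with the incoming gradient
        return grad_output * 2 * x


x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = NumpySquare.apply(x)
y.sum().backward()
print(x.grad)  # tensor([2., 4., 6.])
```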

Why use PyTorch?

Why PyTorch? What makes it particularly well suited to building deep learning models? PyTorch is a dynamic library: it builds the computation graph on the fly as your code runs, which makes it flexible enough to adapt to your requirements as they change. It is also frequently used by finishers in Kaggle competitions.

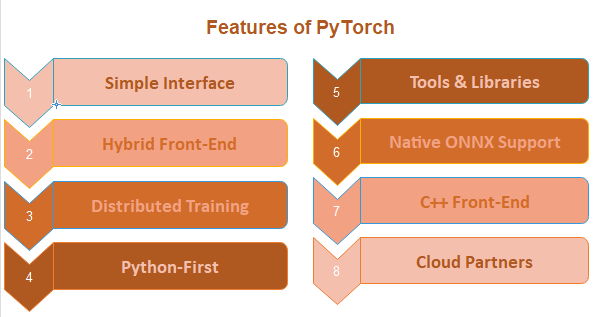
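To make "dynamic" concrete, the sketch below uses a data-dependent `while` loop. How many times the loop runs depends on the input values, so the recorded graph can differ from one input to the next, and autograd still differentiates through whichever path was taken. The helper `halve_until_small` is hypothetical and exists only for this illustration.

```python
from typing import Tuple

import torch


def halve_until_small(x: torch.Tensor, limit: float = 1.0) -> Tuple[torch.Tensor, int]:
    """Hypothetical helper: halve x until its norm drops to `limit` or below."""
    steps = 0
    # Ordinary Python control flow decides, at run time, how many operations are recorded
    while x.norm() > limit:
        x = x / 2
        steps += 1
    return x, steps


x = torch.tensor([3.0, 4.0], requires_grad=True)  # norm is 5
y, steps = halve_until_small(x)
y.sum().backward()
print(steps)   # 3  (5 -> 2.5 -> 1.25 -> 0.625)
print(x.grad)  # tensor([0.1250, 0.1250]), i.e. 1/2**3 for each element
```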

Many features lead deep learning scientists to use PyTorch for building deep learning models.

These features are as follows:

Simple interface

PyTorch has a simple, Python-like interface and provides an easy-to-use API. Running and operating the framework feels much like running ordinary Python. PyTorch can be installed and used on both Windows and Linux.
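As an illustration of how compact the API is, here is a minimal training-loop sketch. The data is synthetic (points on the line `y = 2x + 1` with a little noise), and the learning rate and epoch count are arbitrary choices; the loop itself (zero the gradients, forward pass, `backward()`, `step()`) is the standard PyTorch pattern.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Synthetic data for illustration: y = 2x + 1 plus a little noise
x = torch.linspace(-1, 1, 100).unsqueeze(1)
y = 2 * x + 1 + 0.05 * torch.randn_like(x)

model = nn.Linear(1, 1)
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

for epoch in range(200):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(x), y)  # forward pass and loss
    loss.backward()              # backpropagate
    optimizer.step()             # update the weights

# The learned parameters should end up close to 2 and 1
print(model.weight.item(), model.bias.item())
```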

Hybrid Front-End

PyTorch provides a hybrid front-end that offers flexibility and ease of use in eager mode, while seamlessly transitioning to graph mode for speed, optimization, and functionality in C++ runtime environments.

For example:

```python
import torch

@torch.jit.script
def Rnn(h, x, Wh, Uh, Wy, bh, by):
    y = []
    for t in range(x.size(0)):
        h = torch.tanh(x[t] @ Wh + h @ Uh + bh)
        y += [torch.tanh(h @ Wy + by)]
        if t % 10 == 0:
            print("stats: ", h.mean(), h.var())
    return torch.stack(y), h
```

Distributed Training

PyTorch allows developers to train neural network models in a distributed manner. It provides optimized performance in both research and production through native support for peer-to-peer communication and asynchronous execution of collective operations, accessible from both Python and C++.

For example:

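```python
import torch
import torch.distributed as dist
from torch.nn.parallel import DistributedDataParallel

# Assumes a launcher such as torchrun has set RANK, WORLD_SIZE, MASTER_ADDR and MASTER_PORT
dist.init_process_group(backend="gloo")
model = torch.nn.Linear(10, 1)  # placeholder model for illustration
model = DistributedDataParallel(model)
```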
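The asynchronous collectives mentioned above can be sketched in a single process. This is a minimal sketch under stated assumptions: it uses a file-based rendezvous and `world_size=1` so that it runs without a launcher, which means the all-reduce simply returns the original values. Real jobs would normally start several processes (for example with `torchrun`), and the communication could then overlap with other work before `wait()` is called.

```python
import os
import tempfile

import torch
import torch.distributed as dist


def main() -> torch.Tensor:
    # File-based rendezvous so this single-process sketch runs without a launcher
    init_file = os.path.join(tempfile.mkdtemp(), "dist_init")
    dist.init_process_group(
        backend="gloo", init_method=f"file://{init_file}", rank=0, world_size=1
    )
    try:
        t = torch.tensor([1.0, 2.0, 3.0])
        # async_op=True returns a work handle immediately instead of blocking
        work = dist.all_reduce(t, op=dist.ReduceOp.SUM, async_op=True)
        # ... other computation could overlap with the communication here ...
        work.wait()  # block until the collective has completed
        return t
    finally:
        dist.destroy_process_group()


if __name__ == "__main__":
    # With world_size=1 the sum over all ranks is just this rank's tensor
    print(main())  # tensor([1., 2., 3.])
```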

Python-First

PyTorch is built deeply into Python, and its code is completely Pythonic: it uses widely adopted Python idioms rather than requiring you to write Java or C++ code. PyTorch works with popular Python libraries and packages such as Cython and Numba.

For example:

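```python
import torch
import numpy as np

x = np.ones(5)
y = torch.from_numpy(x)  # y shares memory with the NumPy array x

np.add(x, 1, out=x)  # modify x in place

print(x)  # [2. 2. 2. 2. 2.]
print(y)  # tensor([2., 2., 2., 2., 2.], dtype=torch.float64)
```

Tools and Libraries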

A rich ecosystem of tools and libraries is available for extending PyTorch and supporting development in areas ranging from computer vision to reinforcement learning. This ecosystem is developed by an active community of researchers and developers, and it helps them build flexible deep learning models quickly.

For example:

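```python
import torchvision.models as models

# torchvision 0.13+ selects pretrained weights with the `weights` argument
# (the older `pretrained=True` flag is deprecated)
resnet18 = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)
alexnet = models.alexnet(weights=models.AlexNet_Weights.DEFAULT)
squeezenet = models.squeezenet1_0(weights=models.SqueezeNet1_0_Weights.DEFAULT)
vgg16 = models.vgg16(weights=models.VGG16_Weights.DEFAULT)
densenet = models.densenet161(weights=models.DenseNet161_Weights.DEFAULT)
inception = models.inception_v3(weights=models.Inception_V3_Weights.DEFAULT)
```

Native ONNX Support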

ONNX was developed for converting models between frameworks. To get direct access to ONNX-compatible platforms, runtimes, visualizers, and more, export your models in the standard ONNX format.

For example:

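```python
import torch
import torch.onnx
import torchvision

# A dummy input with the shape the model expects: (batch, channels, height, width)
dummy_input = torch.randn(1, 3, 224, 224)
model = torchvision.models.alexnet(weights=torchvision.models.AlexNet_Weights.DEFAULT)
model.eval()  # export in inference mode
torch.onnx.export(model, dummy_input, "alexnet.onnx")
```

C++ Front-End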

The C++ front-end is a C++ interface to PyTorch that follows the design and architecture of the established Python front-end. It is intended to enable research in high-performance, low-latency, bare-metal C++ applications.

For example:

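```cpp
#include <torch/torch.h>

// Fragment to place inside a function. Assumes `num_features` and `dataset`
// (a LibTorch dataset of {data, target} examples) are defined elsewhere.
torch::nn::Linear model(num_features, 1);
torch::optim::SGD optimizer(model->parameters(), torch::optim::SGDOptions(/*lr=*/0.01));
auto data_loader = torch::data::make_data_loader(
    std::move(dataset).map(torch::data::transforms::Stack<>()),
    /*batch_size=*/64);

for (size_t epoch = 0; epoch < 10; ++epoch) {
  for (auto& batch : *data_loader) {
    optimizer.zero_grad();  // clear gradients from the previous step
    auto prediction = model->forward(batch.data);
    auto loss = torch::mse_loss(prediction, batch.target);
    loss.backward();
    optimizer.step();
  }
}
```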

Cloud Partners

PyTorch is well supported on major cloud platforms such as AWS and Google Cloud. Prebuilt images, large-scale training on GPUs, and the ability to run models in production-scale environments provide frictionless development and easy scaling.

For example:

```shell
export IMAGE_FAMILY="pytorch-latest-cpu"
export ZONE="us-west1-b"
export INSTANCE_NAME="my-instance"

gcloud compute instances create $INSTANCE_NAME \
  --zone=$ZONE \
  --image-family=$IMAGE_FAMILY \
```
